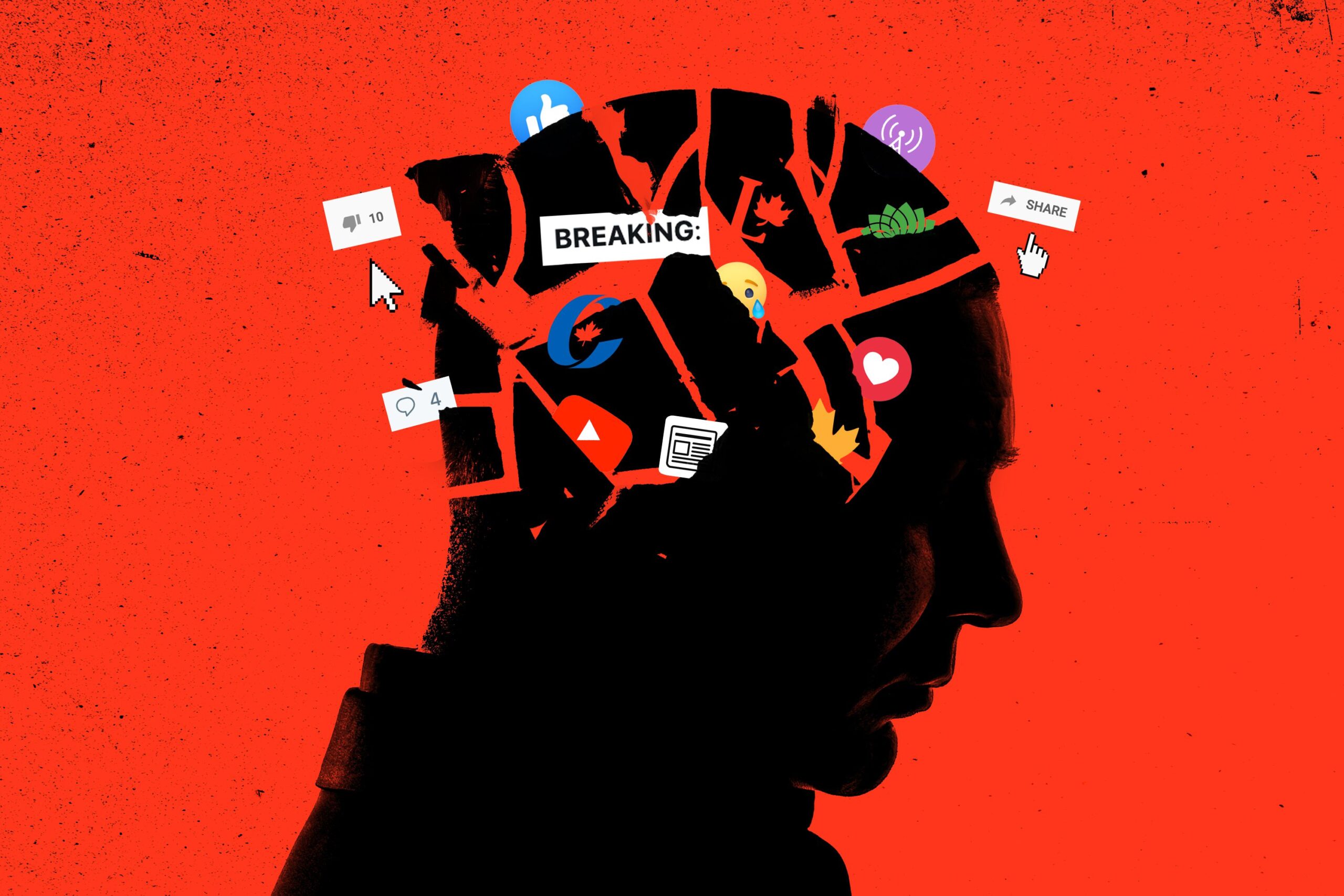

Billions of monthly active users of social media are capable of posting something inflammatory online. This is an overwhelming amount of information for manual fact-checkers. © The Globe and Mail

Prof. Sam Lehman-Wilzig – A.I. (Dis)Information: Good News for Serious Media

Yesterday’s Torah reading centers on the twelve spies sent to Canaan to ferret out who and what that land is all about. Ten of them returned with exaggerated, if not outright specious reports – not to mention cowardly “analysis” of what it all means. That’s a good springboard for discussing today’s “news distortions” – except this time it’s not humans doing the “slanting” but rather our creation: Artificial Intelligence.

As you read any newspaper, how do you know that the articles (or even commentary like mine here!) are not the product of an Artificial Intelligence algorithm/bot/program? These have sprouted recently like mushrooms after a rain shower, e.g., OpenAI’s GPT-4 (https://openai.com/product/gpt-4) and Google’s recently released Bard (https://bard.google.com).

The problem, however, is far worse: how can you be sure that the information provided is factually true and not fabricated or seriously distorted by the AI bot, a/k/a an “AI hallucination”? If you don’t believe me, then listen to the “Godfather” of AI, Geoffrey Hinton, who recently resigned from Google because of his worry that the AI arms race is bringing on an internet that will be so overwhelmed by fake images and text that in the very near future no one will know what is true anymore.

Indeed, major newspapers and magazines have already started publishing AI-written pieces without publicizing that fact – and readers do not seem able to distinguish the human-written from the AI-created news items, commentaries, background reports, etc. Moreover, with programs such as DALL-E 2 (https://openai.com/product/dall-e-2), “an AI system that can create realistic images and art from a description in natural language,” even the “photos” or illustrations might not be authentic!

Want more? AI “voice generators” are now easily available, in two versions. Text to Speech (TtS) uses text to create synthetic speech based on predeveloped voices; Speech to Speech (StS) uses audio of a person’s real voice to create a specific, custom-made voice. These can be transformed into voices of different genders, accents, and languages. That audio news item you just listened to? It might not have been the voice of a human being….

It goes without saying (pun intended) that all these AI technologies entail a huge headache for the news world as we once knew it. (I’m not even referring to the fact that even more journalism jobs could be lost – a problem for the profession in and of itself.) The main problem is disinformation – from two different sources.

First, the “hallucination” issue mentioned above. These AI programs create their “product” from the billions of texts found on the internet. As we all know, not everything there is “true” (understatement!). Thus, based on such incorrect information, the AI spews out similar garbage. That’s no different from teaching a child that the Earth is flat. At first, she will spout that “fact” with great confidence; eventually, as she obtains other information about the Earth, she will slowly change her stance. An AI bot will eventually “correct” itself, but by then some informational damage has already been done – because, unlike a child who we know isn’t fully developed intellectually, the AI presents itself as an adult human, and we aren’t aware of its real (childlike) nature.

Second, and more insidious, is human-directed AI misinformation. Let’s say that newspaper X is a straight shooter but doesn’t have a very selective approach to its news sources. Just because the editorial staff wishes to deliver “just the facts” doesn’t mean that they will succeed if their sources are AI bots manipulated by humans bent on informational mischief. Governments, especially, are interested in promulgating such disinformation campaigns (as Putin was accused of doing in the 2016 U.S. elections, and as he is certainly doing with his false propaganda about the current Ukraine War). The problem here, of course, is that such “news” comes unsourced – or falsely sourced – and even newspapers with the best intentions can fall for the fake.

What does this bode for the future of news? Paradoxically, in the long run it should actually help the most responsible news purveyors. “Responsible” in this context means a newspaper that invests resources in checking its sources. For example, an alleged audio clip of the prime minister lambasting his coalition partners in a private conversation has to be checked both technologically (there are anti-AI algorithms for this) and personally, with all the purported attendees. Journalistic AI responsibility also entails the news site explicitly noting which items were AI-generated – not just what was done to ensure the content’s veracity.

As news consumers become more and more inundated by AI-created news reports, and questions regarding AI truth-telling increase in number, positive opportunities for the best and most “secure” news purveyors will increase as well. Of course, there is a not insignificant number of news consumers who relish false, invented, conspiracy-oriented “news” – for them the new AI news world will be a Garden of Eden with lots of Trees of (Un)Knowledge. But the more serious news sites should actually flourish in this brave new world of AI-news – on condition that they do their utmost to ensure that what they offer has been deeply vetted and factually sourced, and that they are completely transparent as to what was produced by whom (humans) and by what (AI). Precisely because the average news consumer cannot be expected to differentiate between the two, reliance on those who can – their news sources – offers the latter a once-in-a-lifetime chance to revive trust in the (responsible) media.

Let’s just hope that, unlike the Children of Israel, we don’t have to wait 40 years to arrive at our informational Promised Land.
